Lecture Notes 1: Introduction to Artificial Intelligence

Main Reference

Artificial Intelligence: A Modern Approach, Global Edition (Stuart J. Russell and Peter Norvig)

Definitions

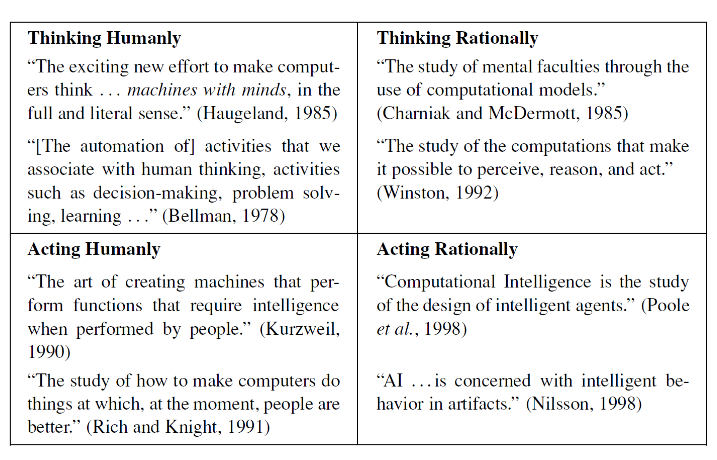

The definition of AI can be viewed along two dimensions: human vs. rational, and thought vs. behaviour.

Figure 1. Definition of Artificial Intelligence based on thinking and acting (Salameh, 2017)

Acting Humanly

To act like a human, a computer needs the following capabilities:

- Natural Language Processing

- Knowledge Representation

- Automated Reasoning

- Machine Learning

A system with the capabilities above could pass the Turing test. To act fully like a human, the AI should also pass the total Turing test, which additionally requires:

- Computer Vision and Speech Recognition

- Robotics

Thinking Humanly

To make AI think like a human, we must first know how humans think. We can learn about human thought through:

- Introspection

- Psychological Experiments

- Brain Imaging

Thinking Rationally

Correct reasoning can be seen as a part of intelligence. This approach emphasizes drawing correct inferences. The relevant fields of study include:

- Logic

- Probability

Acting Rationally

An agent is the main component of an AI system that acts. A rational agent acts to achieve the best outcome it can infer. In an uncertain environment, the agent should achieve the best expected outcome.

“On the other hand, there are ways of acting rationally that cannot be said to involve inference. For example, recoiling from a hot stove is a reflex action that is usually more successful than a slower action taken after careful deliberation.” (Russell and Norvig, 2022)

- Rational Agent Approach
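The idea of choosing the action with the best expected outcome can be sketched in a few lines of Python. This is only an illustration: the action names, probabilities, and utility values below are made-up assumptions, not taken from the reference.

```python
def expected_utility(outcomes):
    """Sum of probability * utility over an action's possible outcomes."""
    return sum(p * u for p, u in outcomes)


def choose_action(actions):
    """Return the name of the action with the highest expected utility."""
    return max(actions, key=lambda name: expected_utility(actions[name]))


# Hypothetical example: each action maps to (probability, utility) pairs
# for the two outcomes "rain" and "no rain".
actions = {
    "take_umbrella": [(0.3, 8.0), (0.7, 6.0)],
    "leave_umbrella": [(0.3, 0.0), (0.7, 10.0)],
}

best = choose_action(actions)
print(best, expected_utility(actions[best]))
```

With these assumed numbers the agent prefers to leave the umbrella (expected utility 7.0 against 6.6), even though that action has the worst possible single outcome.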

Discussion

The standard model above is a useful guide for constructing AI agents. However, it may not be ideal in the long run. The main reason is that it assumes we supply a fully specified objective to the agent.

- Value Alignment Problem

“there is an easy fix for an incorrectly specified objective: reset the system, fix the objective, and try again. As the field progresses towards increasingly capable intelligent systems that are deployed in the real world, this approach is no longer viable. A system deployed with an incorrect objective will have negative consequences. Moreover, the more intelligent the system, the more negative the consequences.” (Russell and Norvig, 2022)

- Even in the seemingly unproblematic example of chess, what if the agent acts beyond the confines of the chessboard? This could lead to harmful behaviour.

“These behaviors are not “unintelligent” or “insane”; they are a logical consequence of defining winning as the sole objective for the machine.” (Russell and Norvig, 2022)

Is the standard model with a fixed objective enough, then? Should we consider human-in-the-loop approaches, or lifelong learning to understand the true objectives of humans?

Foundations of AI

Philosophy

- Can formal rules be used to draw valid conclusions?

Aristotle (384–322 BCE): an informal system of syllogisms

- How does the mind arise from a physical brain?

One view holds that the mind is the physical system itself.

René Descartes (1596–1650): the distinction between mind and matter, dualism.

Alternative to dualism: materialism. The brain’s operation according to the laws of physics constitutes the mind.

- Where does knowledge come from?

Francis Bacon (1561–1626): the empiricism movement, characterized by John Locke’s (1632–1704) dictum: “Nothing is in the understanding, which was not first in the senses.”

David Hume (1711–1776): induction. “general rules are acquired by exposure to repeated associations between their elements”

- How does knowledge lead to action?

“Aristotle argued (in De Motu Animalium) that actions are justified by a logical connection between goals and knowledge of the action’s outcome”

“But how does it happen that thinking is sometimes accompanied by action and sometimes not, sometimes by motion, and sometimes not? It looks as if almost the same thing happens as in the case of reasoning and making inferences about unchanging objects. But in that case the end is a speculative proposition . . . whereas here the conclusion which results from the two premises is an action. . . . I need covering; a cloak is a covering. I need a cloak. What I need, I have to make; I need a cloak. I have to make a cloak. And the conclusion, the “I have to make a cloak,” is an action.” (Aristotle)

Jeremy Bentham (1823) and John Stuart Mill (1863): utilitarianism (what do you think of this idea?)

Immanuel Kant (1785): deontological ethics

“doing the right thing is determined not by outcomes but by universal social laws that govern allowable actions, such as don’t lie or don’t kill.”

Mathematics

- What are the formal rules to draw valid conclusions?

Formal Logic

- How do we reason with uncertain information?

Probability: generalizes logic to situations with uncertain information.

Gerolamo Cardano (1501–1576): first framed the idea of probability.

Thomas Bayes (1702–1761): a rule for updating probabilities in the light of new evidence.
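Updating a probability on new evidence follows Bayes' rule, P(H | E) = P(E | H) P(H) / P(E). The sketch below works through one update; the function name and all numbers (prior, true-positive rate, false-positive rate) are hypothetical and chosen only for illustration.

```python
def bayes_update(prior, likelihood, likelihood_if_false):
    """Posterior P(H | E) from P(H), P(E | H), and P(E | not H)."""
    # Total probability of the evidence, P(E), over both cases of H.
    evidence = likelihood * prior + likelihood_if_false * (1.0 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers: 1% prior, 90% true-positive rate, 5% false-positive rate.
posterior = bayes_update(prior=0.01, likelihood=0.9, likelihood_if_false=0.05)
print(round(posterior, 3))
```

Under these assumptions the posterior is only about 0.154: a positive result raises the probability well above the 1% prior, but the hypothesis is still more likely false than true.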

- What can be computed?

Algorithm (Muhammad ibn Musa al-Khwarizmi)

First-order logic

Tractability and intractability

Economics

- How should we make decisions in accordance with our preferences?

Utility theory

Decision theory: combines probability theory with utility theory

- How should we do this when others may not go along?

Multi-agent systems

- How should we do this when the payoff may be far in the future?

Markov Decision Process

Reinforcement Learning
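To make the idea of payoffs far in the future concrete, here is a minimal sketch of value iteration, a standard dynamic-programming method for solving a Markov Decision Process whose model is known. The two-state MDP, its rewards, and the discount factor are hypothetical. Reinforcement learning addresses the harder case where the model has to be learned from experience, which this sketch does not cover.

```python
# Hypothetical MDP: transitions[state][action] = [(prob, next_state, reward), ...]
transitions = {
    "A": {"stay": [(1.0, "A", 0.0)], "go": [(0.8, "B", 0.0), (0.2, "A", 0.0)]},
    "B": {"stay": [(1.0, "B", 1.0)], "go": [(1.0, "A", 0.0)]},
}
GAMMA = 0.9  # discount factor: how much future reward counts (assumed value)


def q_value(values, state, action):
    """Expected immediate reward plus discounted value of the next state."""
    return sum(p * (r + GAMMA * values[s2])
               for p, s2, r in transitions[state][action])


def value_iteration(tolerance=1e-8):
    """Apply the Bellman update until the values stop changing."""
    values = {s: 0.0 for s in transitions}
    while True:
        new_values = {s: max(q_value(values, s, a) for a in transitions[s])
                      for s in transitions}
        if max(abs(new_values[s] - values[s]) for s in transitions) < tolerance:
            return new_values
        values = new_values


values = value_iteration()
# The greedy policy picks, in each state, the action with the highest Q-value.
policy = {s: max(transitions[s], key=lambda a: q_value(values, s, a))
          for s in transitions}
print(values, policy)
```

In state A no action gives an immediate reward, yet the computed policy still chooses "go", because doing so leads to state B where reward accumulates over time.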

Neuroscience

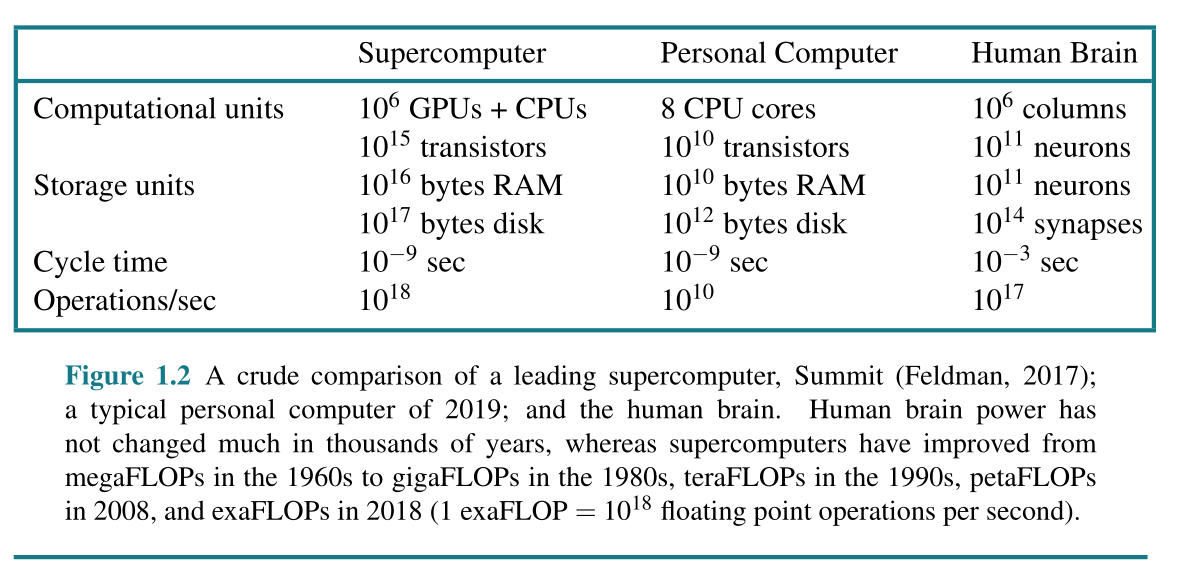

- How do brains process information?

Source: https://www.simplypsychology.org/wp-content/uploads/synapse-1024x387.png

(Russell and Norvig, 2022)

Psychology

- How do humans and animals think and act?

John Watson (1878–1958): behaviorism

Cognitive Psychology

Human-Computer Interaction

Computer Engineering

- How can we build an efficient computer?

Classical computing and Moore’s law: “performance doubled every 18 months or so until around 2005 when power dissipation problems led manufacturers to start multiplying the number of CPU cores rather than the clock speed.”

GPU and TPU

Quantum Computing

Linguistics

- How does language relate to thought?

Computational Linguistics/Natural Language Processing

History of AI

- The inception of artificial intelligence (1943–1956)

- Early enthusiasm, great expectations (1952–1969)

- A dose of reality (1966–1973)

- Expert systems (1969–1986)

- The return of neural networks (1986–present)

- Probabilistic reasoning and machine learning (1987–present)

- Big data (2001–present)

- Deep learning (2011–present)

State-of-the-art of AI

- Large Language Model

- Open-World Lifelong Artificial Intelligence

- Open-World Lifelong Learning for Biodiversity

- Open-World Lifelong Learning for Chatbots

Risks

- Lethal autonomous weapons

- Surveillance and persuasion

- Biased decision making

- Impact on employment

- Safety-critical applications

- Cybersecurity

“Turing’s warning: it is not obvious that we can control machines that are more intelligent than us.”

References

- Russell, S.J. and Norvig, P., 2022, Artificial Intelligence: A Modern Approach, Global Edition

- Salameh, A., 2017, Artificial Intelligence as a Commons – Opportunities and Challenges for Society